2016-07-11 »

So much scary stuff in here, but my favourite line is, "When I was in graduate school in the 1970s, n=10 was the norm, and people who went to n=20 were suspected of relying on flimsy effects and wasting precious research participants."

My wifi analyses have n=~millions of data points, and I still have no idea if I'm doing it right. The difference is they pay me either way so I have less incentive to go on a 20-year self-delusion binge.

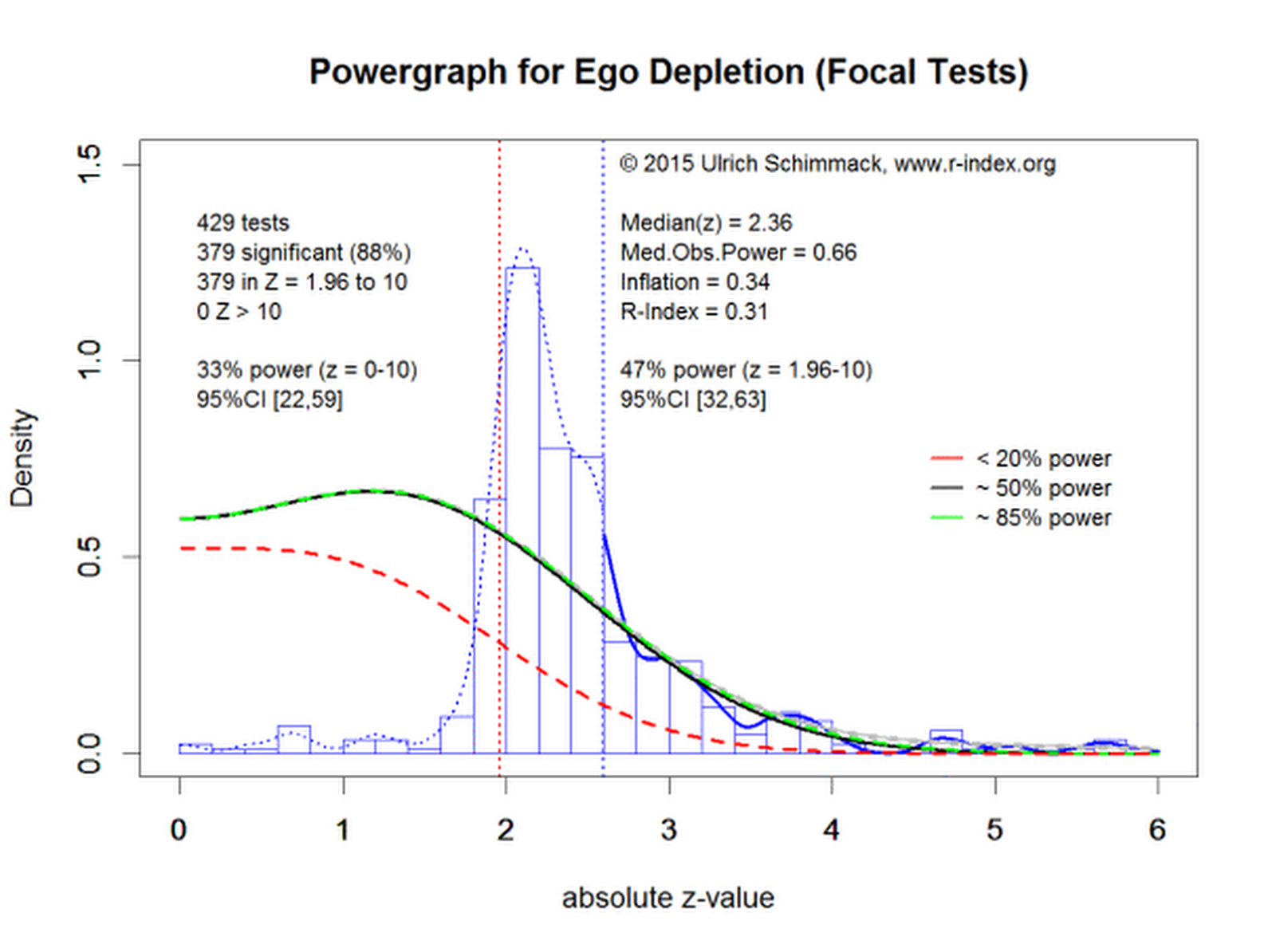

I like the meta-analysis method where they (to oversimplify massively) check for biased results by seeing if they are suspiciously close to the standardized publishing cutoffs. They can even, using methods I don't quite understand, tell publication bias (we don't publish negative results) apart from methodology errors (e.g. filtering out outlier data points you don't like). It's neat.
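To make the "suspiciously close to the cutoff" idea concrete, here is a minimal sketch of one simple version, usually called a caliper test. This is not necessarily what the paper's authors do, and it only covers the first part (spotting a pile-up), not the trick of separating publication bias from methodology errors. The function name `caliper_test`, the 0.01 window width, and the sample p-values are all made up for illustration. The assumption is that, in a narrow window around 0.05, honest results should land on either side about equally often, which is itself a simplification.

```python
from math import comb


def caliper_test(p_values, cutoff=0.05, width=0.01):
    """Count reported p-values just below vs. just above the cutoff.

    Returns (below, above, tail), where tail is the one-sided probability of
    seeing at least `below` of the `below + above` values fall under the
    cutoff if each side were equally likely (an assumed 50/50 null).
    """
    below = sum(1 for p in p_values if cutoff - width <= p < cutoff)
    above = sum(1 for p in p_values if cutoff <= p < cutoff + width)
    n = below + above
    if n == 0:
        # Nothing landed in the window, so there is nothing to compare.
        return below, above, 1.0
    # Exact binomial upper tail: P(X >= below) for X ~ Binomial(n, 0.5).
    tail = sum(comb(n, k) for k in range(below, n + 1)) / 2 ** n
    return below, above, tail


# Made-up p-values, purely illustrative (not from any real study).
reported = [0.001, 0.041, 0.044, 0.046, 0.047, 0.048, 0.049, 0.049, 0.052, 0.2]
below, above, tail = caliper_test(reported)
print(below, above, round(tail, 3))  # 7 1 0.035
```

A small tail value says the pile-up just under 0.05 would be unlikely by chance alone. It doesn't say why the pile-up is there, which is the part the fancier methods try to answer.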
