2013-10-05 »

This is my dream for how logs analysis should work, essentially.

http://www.artlebedev.com/mandership/167/

2013-10-06 »

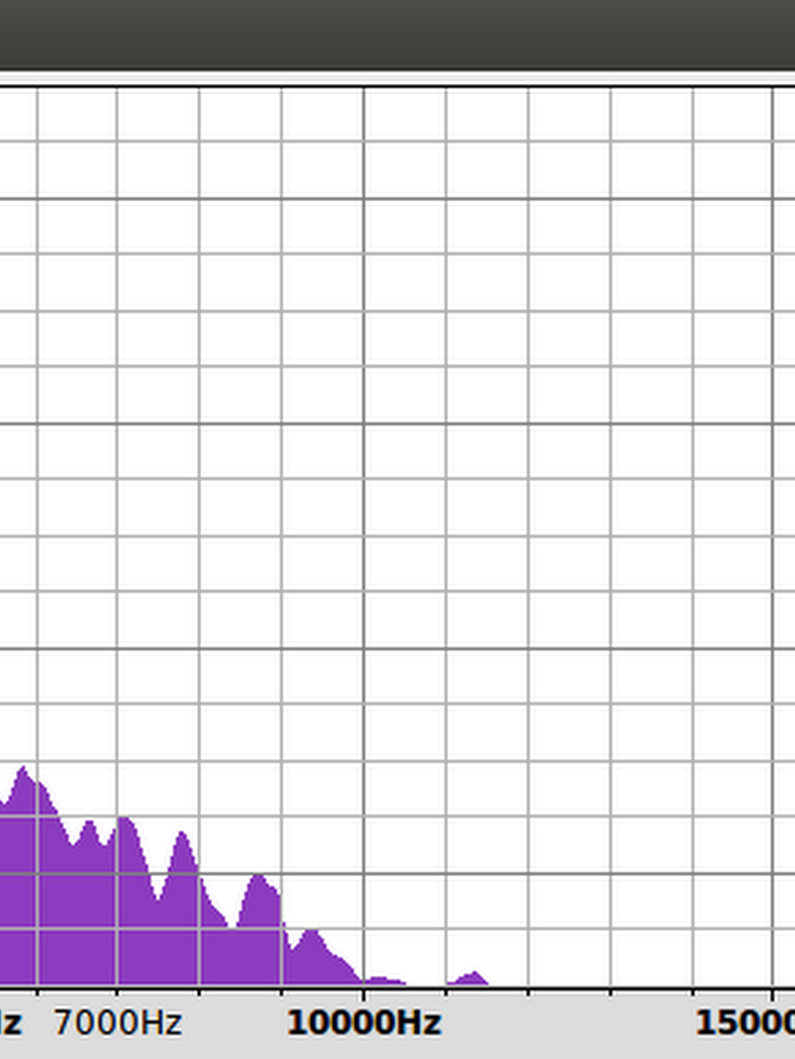

From our manufacturing team, an interesting plot of the FFT of fan noises in a good fan vs. a bad one.

2013-10-07 »

Imagine your whole program was a single source file, with all the bits #included into the one thing, with no private or static variables anywhere.

Now imagine it's about 34000 lines of source code.

Many of which are macro definitions and/or calls to those macros.

Now imagine it's not C, but GNU make.

That's buildroot.

2013-10-08 »

"...but note, channels 36..48 are limited to 50mW max power per FCC. Channels 52..140 (DFS) are limited to 250mW. Channels 149..165 allow the highest output power, 800/1000mW."

Good lord, this is getting complicated.
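To keep the quoted limits straight, here's a rough sketch of them as a lookup table. The numbers come straight from the quote above; the function names are made up for illustration, and picking 1000 mW for the top band is an assumption, since the quote says "800/1000mW" without saying which applies when.

```python
import math

# (first_channel, last_channel, max_power_mw), as quoted above.
# ASSUMPTION: the top band uses 1000 mW, the upper end of "800/1000mW".
POWER_LIMITS_MW = [
    (36, 48, 50),
    (52, 140, 250),    # DFS channels
    (149, 165, 1000),
]

def max_power_mw(channel):
    """Return the quoted power limit in mW, or None if the quote doesn't cover it."""
    for first, last, limit_mw in POWER_LIMITS_MW:
        if first <= channel <= last:
            return limit_mw
    return None

def mw_to_dbm(mw):
    """Convert milliwatts to dBm (0 dBm = 1 mW)."""
    return 10 * math.log10(mw)

if __name__ == "__main__":
    for ch in (36, 100, 149):
        mw = max_power_mw(ch)
        print(f"channel {ch}: {mw} mW ({mw_to_dbm(mw):.1f} dBm)")
```

This only encodes what the quote says; it is not a substitute for the actual regulatory tables.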

2013-10-09 »

What's the point of flash wear leveling, again?

Look at ubifs for example. When a block starts getting "old" and growing a lot of ECC-correctable errors, it marks the block bad pre-emptively and moves your stuff to a better block. If it tries to write to a block and it doesn't work, it marks the block bad and moves your stuff to a better block. So it's quite good at dealing with blocks that go bad.

Is there really anything statistically better about moving your stuff around pre-emptively, before the block goes bad, rather than just moving it around after the block goes bad?

As far as I can tell, once something is written successfully, you're unlikely to lose more than a few bits at a time, which ECC (and then UBI's auto-migration-with-too-many-ECC-fixes feature) can safely recover as long as you read your data every now and then to check on it. But maybe there's some block failure mode I'm not understanding.

2013-10-15 »

ath9k-htc firmware is open source

Atheros actually released the source code to the firmware blob for one of their chips?!? That's one of the most progressive things I've heard in a long time.

https://github.com/qca/open-ath9k-htc-firmware

2013-10-16 »

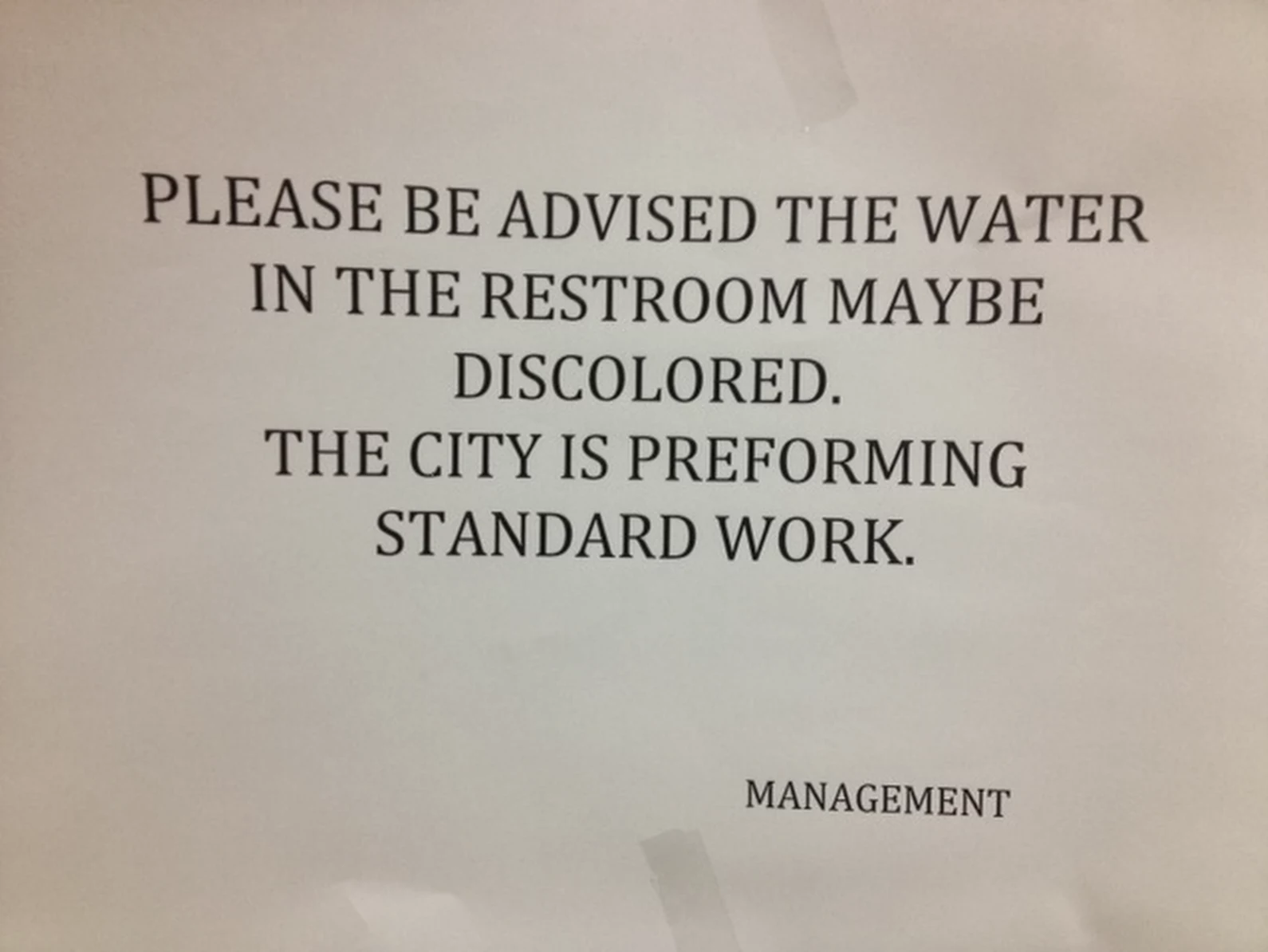

I feel that if the work were really so "standard" then you'd probably know for sure if the water should be discolored.

2013-10-23 »

"Doubling" wifi range with a new wifi standard?

Here's an article referenced by the 802.11n wikipedia article, supposedly justifying the claims that the maximum range of 802.11n is twice that of 802.11g. (230ft vs 125ft)

http://www.wi-fiplanet.com/tutorials/article.php/3680781

Here's the problem: they talk about "doubling the range" as meaning "the same speed at twice the distance or twice the speed at the same distance." Well, that sounds right, but it's better for marketing than for reality. Basically, the article demonstrates that this is true by just showing how, at short range, you can get 144 Mbit/sec instead of 54 Mbit/sec (yay, more than double!) or at slightly longer range, your 802.11n connection degrades from 144 down to, say, 57, which is still more than 54. Therefore it meets both criteria for longer range!

Sigh. I'm pretty sure both of those are just examples of being faster - which everyone knows 802.11n is, and it certainly is in my tests.

But if my definition of range is, for the sake of argument, "the maximum distance from the AP where you can get near-zero user-visible packet loss and < 50ms latencies," then using that definition, I can't see how 802.11n is any better at all. It certainly isn't in any of my tests. Which makes sense, because both 802.11n and 802.11g just give up and use the same slow-speed encoding at longer ranges.
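For concreteness, that definition of range could be computed from a set of measurements roughly like this. It's only a sketch: the function name and the sample format are hypothetical, and treating "near-zero" loss as at most 1% is my assumption, not a number from any test. The 50ms latency cutoff is the one from the definition above.

```python
def usable_range(samples, max_loss=0.01, max_latency_ms=50.0):
    """Largest distance at which the link is still usable.

    samples: iterable of (distance, loss_fraction, latency_ms) tuples.
    Walks outward from the AP and stops at the first measurement that
    fails, so a lucky good spot beyond a dead zone doesn't count.
    Returns None if even the closest measurement fails.
    """
    best = None
    for distance, loss, latency_ms in sorted(samples):
        # ASSUMPTION: "near-zero" loss means <= max_loss (1% by default).
        if loss > max_loss or latency_ms >= max_latency_ms:
            break
        best = distance
    return best
```

Note that link speed doesn't appear anywhere in this function, which is the whole point: a standard can be faster at every distance and still have exactly the same usable range.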

To their credit, the "approximate indoor range" column in wikipedia has a [citation needed] despite the footnote pointing to the above article. (The article, incidentally, never mentions a specific distance measurement. Which makes sense because it would immediately reveal that they're using a lying definition for "range.")

There. Just had to get that off my chest. I'm pretty sure that article - and a series of others from when 802.11n was released - is responsible for the persistent rumours that 802.11n has double the maximum range of 802.11g. It doesn't. Not even 10% more. Bah.

(Disclaimer: it's possible that chipset improvements from the same time period did improve range just by being better-made chips. This doesn't seem too likely though, since I have an ancient 802.11g Linksys router here that I also use for testing, and it certainly is no worse than the new ones in terms of maximum range.)

2013-10-28 »

Daniel tested our MoCA 2.0 support, and actually got ~390 Mbit/sec TCP out of the theoretical MoCA 2.0 maximum of 400 Mbit/sec. That's amazing. That never happens.

What this means is that it could also be plausible, in the not too distant future, to use dual-channel bonded MoCA (using two bands at once) and get the actual rated 800 Mbit/sec. Yowza.

Clearly the MoCA alliance needs to hire some of the marketers from the wifi alliance, who manage to say 450 Mbit/sec when they mean 300 Mbit/sec, and they get away with it. Plus their ratings are done using wires instead of antennas. I'm not even kidding.

2013-10-30 »

Okay, it's official. Wifi is my contribution to the Peter Principle. Except I didn't even get promoted first!

Doom.

2013-10-31 »

In other news, PC viruses don't exist because it would be impossible to install software so quickly and reliably using InstallShield.

http://www.rootwyrm.com/2013/11/the-badbios-analysis-is-wrong/
